GPUTejas Overview

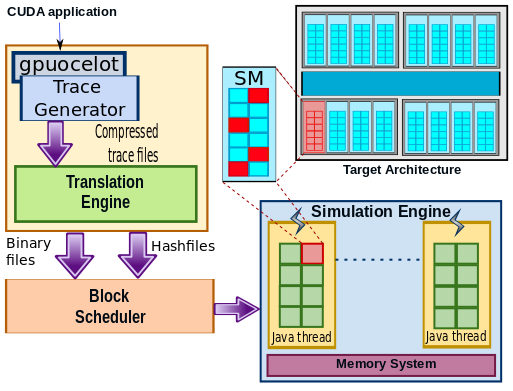

GpuTejas is a highly configurable simulator that can simulate advanced GPU architectures such as Tesla, Fermi, and Kepler. The simulator takes a CUDA executable as input. We first run the executable on an instrumented version of Ocelot, which generates a set of trace files. These trace files primarily contain information about the instructions being executed, including the instruction type, the instruction pointer (IP), and the corresponding PTX instruction. Note that PTX (Parallel Thread Execution) is an intermediate device language, which has to be converted into device-specific binary code for native execution. For load/store instructions, the trace additionally contains the list of memory addresses accessed by the instruction. We also embed some metadata in every trace file: the number of kernels, the grid size of each kernel, and the number of blocks in each kernel.
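
The paragraph above lists what a first-pass trace records, but not how it is laid out on disk. As a rough illustration only, the Java sketch below models one trace entry and the per-kernel metadata, and parses a made-up tab-separated line format. The class names, field types, and line format are all assumptions, not the actual GpuTejas trace format.

```java
import java.util.ArrayList;
import java.util.List;

public class TraceRecordSketch {

    // One executed instruction from a first-pass trace. The fields follow the
    // text (type, IP, PTX, accessed addresses); the Java types are assumptions.
    record TraceEntry(long ip, String type, String ptx, List<Long> addresses) {
        // Only load/store instructions carry memory addresses.
        boolean isMemoryOp() {
            return !addresses.isEmpty();
        }
    }

    // Per-kernel metadata embedded in each trace file (field names hypothetical).
    record KernelMeta(int gridX, int gridY, int gridZ, int numBlocks) {}

    // Parses one line of a HYPOTHETICAL tab-separated format:
    //   ip <TAB> type <TAB> ptx <TAB> comma-separated addresses (empty if none)
    static TraceEntry parse(String line) {
        String[] f = line.split("\t", -1); // -1 keeps a trailing empty field
        if (f.length != 4) {
            throw new IllegalArgumentException("expected 4 fields: " + line);
        }
        List<Long> addrs = new ArrayList<>();
        if (!f[3].isEmpty()) {
            for (String a : f[3].split(",")) {
                addrs.add(Long.decode(a.trim())); // accepts 0x-prefixed hex
            }
        }
        return new TraceEntry(Long.decode(f[0]), f[1], f[2], List.copyOf(addrs));
    }

    public static void main(String[] args) {
        List<KernelMeta> meta = List.of(new KernelMeta(16, 16, 1, 256));
        TraceEntry ld = parse("0x1a8\tLOAD\tld.global.f32 %f1, [%rd4];\t0x7f00,0x7f04");
        TraceEntry add = parse("0x1b0\tALU\tadd.f32 %f2, %f1, %f1;\t");
        System.out.println("kernels: " + meta.size());               // kernels: 1
        System.out.println(ld.isMemoryOp() + " " + ld.addresses()); // true [32512, 32516]
        System.out.println(add.isMemoryOp());                       // false
    }
}
```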

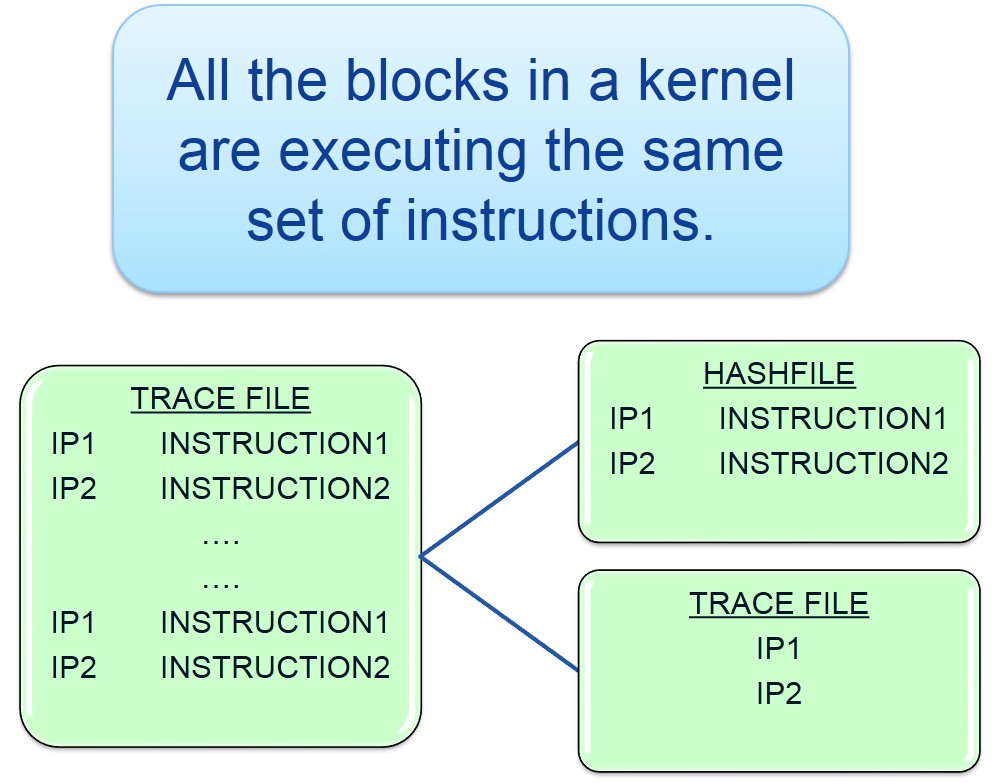

These traces then undergo a second pass that reduces their size. We observed that all the blocks of a kernel contain the same set of instructions, so the trace files were storing redundant information for every block. We therefore store the information about these instructions separately in a hashfile. Our post-processing scripts then generate new trace files that contain only the instruction pointers of the instructions; these instruction pointers map to the actual instructions in the hashfile. Note that these instructions are translated into specific instruction classes before being stored in the hashfiles, which further reduces the space occupied by the generated traces.
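
The second pass can be pictured as deduplication keyed by instruction pointer. The following Java sketch, using assumed names (`Instr`, `InstrClass`, `compress`) and a deliberately coarse, hypothetical set of instruction classes, builds one shared hashfile that maps each IP to its class and rewrites every block's trace as a plain array of IPs. It is not the GpuTejas post-processing script, which the text does not show.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class HashfileSketch {

    // Hypothetical, deliberately coarse instruction classes.
    enum InstrClass { LOAD, STORE, BRANCH, FP, ALU }

    // One executed instruction in a first-pass block trace.
    record Instr(long ip, String ptx) {}

    // Output of the second pass: one shared hashfile plus an IP-only trace per block.
    record Compressed(Map<Long, InstrClass> hashfile, List<long[]> ipTraces) {}

    // Maps a PTX instruction to a class from its opcode (simplified rules).
    static InstrClass classify(String ptx) {
        String op = ptx.trim().split("[\\s.]", 2)[0]; // e.g. "ld" from "ld.global.f32 ..."
        return switch (op) {
            case "ld" -> InstrClass.LOAD;
            case "st" -> InstrClass.STORE;
            case "bra" -> InstrClass.BRANCH;
            default -> (ptx.contains(".f32") || ptx.contains(".f64")) ? InstrClass.FP : InstrClass.ALU;
        };
    }

    static Compressed compress(List<List<Instr>> blockTraces) {
        Map<Long, InstrClass> hashfile = new LinkedHashMap<>();
        List<long[]> ipTraces = new ArrayList<>();
        for (List<Instr> block : blockTraces) {
            long[] ips = new long[block.size()];
            for (int i = 0; i < block.size(); i++) {
                Instr ins = block.get(i);
                // Each distinct IP is classified and stored once, however many blocks execute it.
                hashfile.computeIfAbsent(ins.ip(), k -> classify(ins.ptx()));
                ips[i] = ins.ip();
            }
            ipTraces.add(ips);
        }
        return new Compressed(hashfile, ipTraces);
    }

    public static void main(String[] args) {
        List<Instr> block = List.of(
                new Instr(0x10, "ld.global.f32 %f1, [%rd1];"),
                new Instr(0x18, "add.f32 %f2, %f1, %f1;"),
                new Instr(0x20, "st.global.f32 [%rd2], %f2;"));
        // Two blocks of the same kernel execute the same instructions.
        Compressed c = compress(List.of(block, block));
        System.out.println(c.hashfile());        // {16=LOAD, 24=FP, 32=STORE}
        System.out.println(c.ipTraces().size()); // 2
    }
}
```

Because every block of a kernel repeats the same IPs, the hashfile in this sketch grows with the number of distinct instructions rather than with the number of blocks, which is where the space savings described above come from.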

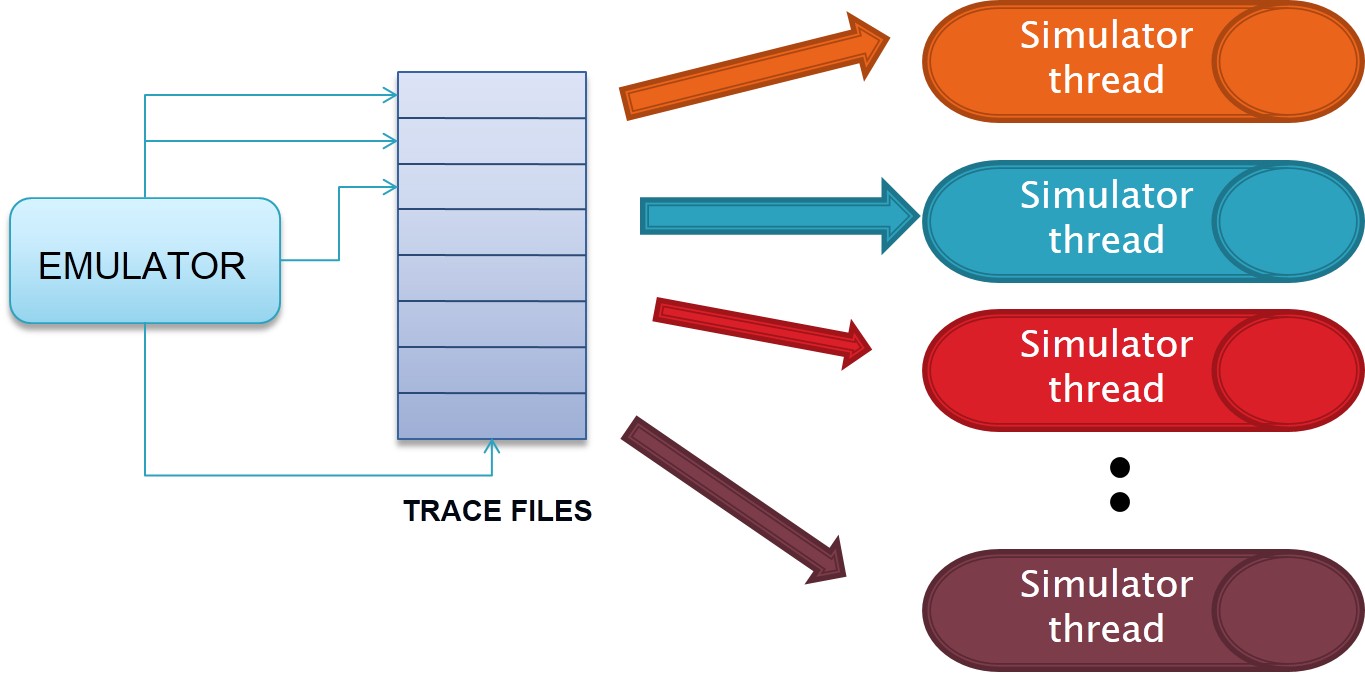

The trace files generated in the second pass are read by the waiting Java simulation threads. These Java-based simulator threads model the GPU and the memory system, and they are responsible for generating the timing information and detailed execution statistics for each unit in the GPU and the memory system.

The NVIDIA Tesla GPU contains a set of TPCs (Texture Processing Clusters), where each TPC contains a texture cache and two SMs (Streaming Multiprocessors). Each SM contains 8 cores (Stream Processors, or SPs), instruction and constant caches, shared memory, and two special function units (SFUs) that can perform integer, FP, and transcendental operations.

A typical CUDA computation is divided into a set of kernels (function calls to the GPU). Each kernel conceptually consists of a large set of computations, arranged as a grid of blocks, where each block contains a set of threads. The NVIDIA GPU defines the notion of a warp: a set of threads from the same block (32 threads on NVIDIA GPUs) that execute together in a SIMD fashion. GpuTejas simulates warps, blocks, grids, and kernels.
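
The grid/block/warp hierarchy above can be made concrete with a small calculation. The Java sketch below follows the standard CUDA convention of linearizing a thread's (x, y, z) index within its block, with x varying fastest, and grouping consecutive runs of 32 threads into warps. The launch dimensions are invented, and the code illustrates the programming model rather than GpuTejas internals.

```java
public class WarpMappingSketch {

    // Warp size on NVIDIA GPUs.
    static final int WARP_SIZE = 32;

    // Linear index of a thread inside its block; x varies fastest, as in CUDA.
    static int linearThreadId(int x, int y, int z, int blockX, int blockY) {
        return x + y * blockX + z * blockX * blockY;
    }

    // Consecutive groups of WARP_SIZE linear thread IDs form one warp.
    static int warpOf(int linearTid) {
        return linearTid / WARP_SIZE;
    }

    // A block whose size is not a multiple of 32 ends with a partially filled warp.
    static int warpsPerBlock(int threadsPerBlock) {
        return (threadsPerBlock + WARP_SIZE - 1) / WARP_SIZE;
    }

    public static void main(String[] args) {
        // Hypothetical launch: a 4x2 grid of blocks, each block 10x10 threads.
        int gridX = 4, gridY = 2, blockX = 10, blockY = 10;
        int threadsPerBlock = blockX * blockY;
        System.out.println("blocks: " + gridX * gridY);                            // blocks: 8
        System.out.println("warps per block: " + warpsPerBlock(threadsPerBlock)); // warps per block: 4
        System.out.println("warp of thread (9,3,0): "
                + warpOf(linearThreadId(9, 3, 0, blockX, blockY)));               // warp of thread (9,3,0): 1
        System.out.println("total warps: "
                + gridX * gridY * warpsPerBlock(threadsPerBlock));                // total warps: 32
    }
}
```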

We parallelize the simulation by allocating a set of SMs to each Java simulation thread. Each SM has its own local clock for maintaining the timing of instructions. Memory instructions are passed to the memory system, which supports private SM caches, instruction caches, constant caches, shared memory, local memory, and global memory. Note that different Java threads do not operate in lockstep. This can create issues in the memory system, where we need to model both causality (load-store ordering) and contention. We adopt novel solutions to model these issues.
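
To illustrate the threading model described above, here is a minimal Java sketch, assuming a hypothetical `Sm` class that holds nothing but a local clock and a list of made-up instruction latencies. SMs are split round-robin across a fixed thread pool (the text only says that each thread is allocated a set of SMs, so the round-robin policy is an assumption), and each thread advances its own SMs' clocks without synchronizing with the other threads.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class ParallelSmSketch {

    // Hypothetical SM model: only a local clock and a list of instruction latencies.
    static final class Sm {
        final int id;
        final int[] latencies;
        long localClock = 0; // in cycles; touched only by the thread that owns this SM

        Sm(int id, int[] latencies) {
            this.id = id;
            this.latencies = latencies;
        }

        // Advance this SM's clock with no reference to any other SM's clock.
        void run() {
            for (int lat : latencies) {
                localClock += lat;
            }
        }
    }

    // Round-robin assignment of SMs to simulation threads (assumed policy).
    static List<List<Sm>> partition(List<Sm> sms, int numThreads) {
        List<List<Sm>> groups = new ArrayList<>();
        for (int t = 0; t < numThreads; t++) {
            groups.add(new ArrayList<>());
        }
        for (int i = 0; i < sms.size(); i++) {
            groups.get(i % numThreads).add(sms.get(i));
        }
        return groups;
    }

    // Each pool thread runs its own SMs to completion; pool threads never wait for each other.
    static void simulate(List<Sm> sms, int numThreads) throws Exception {
        ExecutorService pool = Executors.newFixedThreadPool(numThreads);
        try {
            List<Future<?>> pending = new ArrayList<>();
            for (List<Sm> group : partition(sms, numThreads)) {
                pending.add(pool.submit(() -> group.forEach(Sm::run)));
            }
            for (Future<?> f : pending) {
                f.get(); // join; also makes the SMs' final clocks visible to this thread
            }
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        List<Sm> sms = new ArrayList<>();
        for (int i = 0; i < 4; i++) {
            int[] lat = new int[10 * (i + 1)]; // SM i executes 10*(i+1) instructions
            Arrays.fill(lat, 4);                // made-up latency of 4 cycles each
            sms.add(new Sm(i, lat));
        }
        simulate(sms, 2);
        for (Sm sm : sms) {
            System.out.println("SM" + sm.id + " clock=" + sm.localClock);
        }
    }
}
```

After the run, SM0 through SM3 end at different local times (40, 80, 120, and 160 cycles). Had these SMs issued requests to a shared memory model while running, the requests would have arrived in an order unrelated to their timestamps, which is the causality and contention problem noted above.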